Our Projects

01.

Project LMM - Large Motor Unit Action Potential Model

We’re building a new kind of platform, a connective layer between humans and computers.

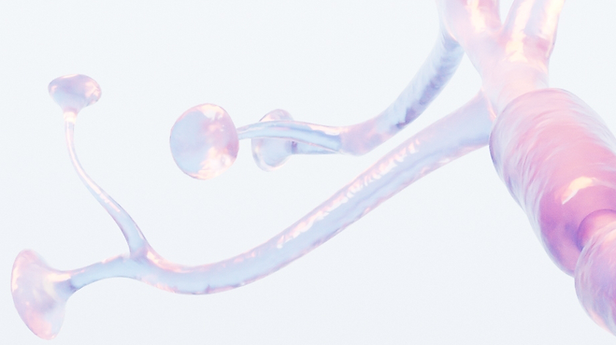

At its core is the Large MUAP Model (LMM) - a neural model that transforms real-time neuromuscular signals into structured, machine-readable data. It enables scalable personalization, predictive interaction, and biometric-level understanding.

The LMM is a domain-specific neural model trained on high-resolution motor unit activity recorded at the wrist. It collects and analyzes continuous streams of neuromuscular signals - detecting the firing patterns of individual motor units and mapping them into a structured, high-dimensional “alphabet.”

This alphabet encodes subtle patterns of movement, underlying intent, and physiological state, creating a new language through which machines can interpret human input with unprecedented fidelity.
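
To make the idea of an "alphabet" of firing patterns more concrete, here is a toy sketch of one way firing times could be turned into discrete symbols. It is an illustration only, not the LMM itself: the function name `tokenize_firings`, the fixed 100 ms window, and the sample spike times are all assumptions made up for this example. It assumes the firing times of each motor unit have already been extracted from the raw signal.

```python
from typing import Dict, List, Tuple


def tokenize_firings(
    spike_times_ms: Dict[int, List[int]],
    window_ms: int,
    duration_ms: int,
) -> List[Tuple[int, ...]]:
    """Count firings per motor unit in fixed windows.

    Each window yields one tuple of counts (one entry per motor unit,
    ordered by unit id). Treating each tuple as a symbol is a
    hypothetical stand-in for a learned "alphabet".
    """
    unit_ids = sorted(spike_times_ms)
    n_windows = -(-duration_ms // window_ms)  # ceiling division
    counts = [[0] * len(unit_ids) for _ in range(n_windows)]
    for col, unit in enumerate(unit_ids):
        for t in spike_times_ms[unit]:
            if 0 <= t < duration_ms:  # ignore firings outside the recording
                counts[t // window_ms][col] += 1
    return [tuple(row) for row in counts]


# Made-up firing times (in milliseconds) for two motor units.
spikes = {0: [10, 60, 110, 260], 1: [30, 240, 290]}
tokens = tokenize_firings(spikes, window_ms=100, duration_ms=300)
print(tokens)  # [(2, 1), (1, 0), (1, 2)]
```

A real system would presumably learn its representation from data rather than rely on fixed windows and raw counts; the sketch only shows how continuous firing activity can become a sequence of discrete, machine-readable symbols.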

By translating raw electrical signals into structured representations, the LMM serves as both a decoder and a learning engine - bridging the gap between the body’s internal signals and the external digital world.

This foundational model doesn’t just recognize gestures; it understands the dynamics behind them. And it gets smarter with every signal.

The LMM continuously learns from real users in real contexts - refining its understanding of how neural patterns vary across individuals, environments, and time. As more data flows through the system, its ability to deliver precise control, early-state detection, and adaptive behavior only grows.

The result is a foundational shift in how computers understand us. Control becomes smoother, prediction becomes sharper, and monitoring becomes deeper - non-invasive, real-time, and closer to the source.

02.

Project Mind Tap

From Gesture to Intent: Pushing the Boundaries of Neural Input

We’re exploring the next frontier in neural interfaces: enabling control through pure intent - without visible movement. Our team is advancing a wrist-worn sensing system capable of detecting neural signals beneath the threshold of physical action - bridging the gap between motor intent and digital execution.

This research pushes beyond micro-gesture classification into a new category of intent-based interaction. Our aim is to achieve BCI-level responsiveness - without the need for implants or high-friction setups.

The outcome: a lightweight, non-invasive wearable that delivers high-fidelity, silent input - unlocking intuitive, low-effort control for the next generation of spatial computing. We believe neural input should feel as natural as thought itself. And we’re building the infrastructure to make that real.